GeoWave User Guide

Introduction

Purpose of this Guide

This user guide focuses on the various ways a user can interact with GeoWave without writing code. It covers the Command-Line Interface (CLI), the ingest process, the vector query language, analytics, visibility management, as well as the GeoServer plugin.

Assumptions

This guide assumes that the reader is familiar with the basics of GeoWave discussed in the Overview. It also assumes that GeoWave has already been installed and is available on the command-line. See the Installation Guide for help with the installation process.

External Components

Some commands in this guide are intended to be used alongside external third party components. The following are not required, but the versions supported by GeoWave are listed below. The installation and configuration of these external components is outside the scope of this document.

| Component | Supported Version(s) | |

|---|---|---|

2.14.x |

||

[ 1.7.x, 1.9.x ] |

||

[ 1.1.x, 1.4.x ] |

||

2.x |

||

1.9.2 |

||

5.9 |

Command-Line Interface (CLI)

Overview

The Command-Line Interface provides a way to execute a multitude of common operations on GeoWave data stores without having to use the Programmatic API. It allows users to manage data stores, indices, statistics, and more. While this guide covers the basics of the CLI, the GeoWave CLI Documentation contains an exhaustive overview of each command and their options.

Configuration

The CLI uses a local configuration file to store sets of data store connection parameters aliased by a store name. Most GeoWave commands ask for a store name and use the configuration file to determine which connection parameters should be used. It also stores connection information for GeoServer, AWS, and HDFS for commands that use those services. This configuration file is generally stored in the user’s home directory, although an alternate configuration file can be specified when running commands.

General Usage

The root of all GeoWave CLI commands is the base geowave command.

$ geowaveThis will display a list of all available top-level commands along with a brief description of each.

Version

$ geowave --versionThe --version flag will display various information about the installed version of GeoWave, including the version, build arguments, and revision information.

General Flags

These flags can be optionally supplied to any GeoWave command, and should be supplied before the command itself.

Config File

The --config-file flag causes GeoWave to use an alternate configuration file. The supplied file path should include the file name (e.g. --config-file /mnt/config.properties). This can be useful if you have multiple projects that use GeoWave and want to keep the configuration for those data stores separate from each other.

$ geowave --config-file <path_to_file> <command>Help Command

Adding help before any CLI command will show that command’s options and their defaults.

$ geowave help <command>For example, using the help command on index add would result in the following output:

$ geowave help index add

Usage: geowave index add [options] <store name> <index name>

Options:

-np, --numPartitions

The number of partitions. Default partitions will be 1.

Default: 1

-ps, --partitionStrategy

The partition strategy to use. Default will be none.

Default: NONE

Possible Values: [NONE, HASH, ROUND_ROBIN]

* -t, --type

The type of index, such as spatial, or spatial_temporal

Explain Command

The explain command is similar to the help command in it’s usage, but shows all options, including hidden ones. It can be a great way to make sure your parameters are correct before issuing a command.

$ geowave explain <command>For example, if you wanted to add a spatial index to a store named test-store but weren’t sure what all of the options available to you were, you could do the following:

$ geowave explain index add -t spatial test-store spatial-idx

Command: geowave [options] <subcommand> ...

VALUE NEEDED PARAMETER NAMES

----------------------------------------------

{ } -cf, --config-file,

{ } --debug,

{ } --version,

Command: add [options]

VALUE NEEDED PARAMETER NAMES

----------------------------------------------

{ EPSG:4326} -c, --crs,

{ false} -fp, --fullGeometryPrecision,

{ 7} -gp, --geometryPrecision,

{ 1} -np, --numPartitions,

{ NONE} -ps, --partitionStrategy,

{ false} --storeTime,

{ spatial} -t, --type,

Expects: <store name> <index name>

Specified:

test-store spatial-idx

The output is broken down into two sections. The first section shows all of the options available on the geowave command. If you wanted to use any of these options, they would need to be specified before index add. The second section shows all of the options available on the index add command. Some commands contain options that, when specified, may reveal more options. In this case, the -t spatial option has revealed some additional configuration options that we could apply to the spatial index. Another command where this is useful is the store add command, where each data store type specified by the -t <store_type> option has a different set of configuration options.

Top-Level Commands

The GeoWave CLI is broken up into several top-level commands that each focus on a different aspect of GeoWave.

Store Commands

The store command contains commands for managing the GeoWave data stores. This includes commands to add, remove, and copy data stores.

Index Commands

The index command contains commands for listing, adding, and removing GeoWave indices from a data store.

Type Commands

The type command contains commands for listing, describing, and removing types at a data store level.

Ingest Commands

The ingest command contains commands for ingesting data into a GeoWave data store.

Statistics Commands

The statistics or stat command contains commands for listing, removing, or recalculating statistics.

Analytic Commands

The analytic command contains commands for performing analytics on existing GeoWave datasets. Results of analytic jobs consist of vector or raster data stored in GeoWave.

Vector Commands

The vector command contains commands that are specific to vector data, this includes various export options.

Raster Commands

The raster command contains commands that are specific to raster data, such as resize commands.

Config Commands

The config command contains commands that affect the local GeoWave configuration. This includes commands to configure GeoServer, AWS, and HDFS.

Util Commands

The util command contains a lot of the miscellaneous operations that don’t really warrant their own top-level command. This includes commands to start standalone data stores and services.

Adding Data Stores

In order to start using GeoWave on a key/value store through the CLI, the store must be added to the GeoWave configuration. This is done through the store add command. For example:

$ geowave store add -t rocksdb exampleThis command takes in several options that are specific to the key/value store that is being used. It is important to note that this command does not create any data or make any modifications to the key/value store itself, it simply adds a configuration to GeoWave so that all of the connection parameters required to connect to the store are easily accessible to the CLI and can be referred to in future commands by a simple store name. For an exhaustive list of the configuration options available for each data store type, see the store add documentation.

Adding Indices

Before ingesting any data, an index must be added to GeoWave that understands how the ingested data should be organized in the key/value store. GeoWave provides out-of-the-box implementations for spatial, temporal, and spatial-temporal indices. These indices can be added to a data store through the index add command. For example:

$ geowave index add -t spatial example spatial_idxWhen an index is added to GeoWave, the appropriate data store implementation will create a table in the key/value store for the indexed data, and information about the index will be added to the metadata. Because of this, when one user adds an index to a GeoWave data store, all users that connect to the same data store with the same configuration parameters will be able to see and use the index. All indices that are added to GeoWave are given an index name that can be used by other CLI operations to refer to that index. For more information about adding different types of indices to a data store, see the index add documentation.

Ingesting Data

Overview

In addition to raw data, the ingest process requires an adapter to translate the native data into a format that can be persisted into the data store. It also requires an index to determine how the data should be organized. The index keeps track of which common fields from the source data need to be maintained within the table to be used by fine-grained and secondary filters.

There are various ways to ingest data into a GeoWave store. The standard ingest localToGW command is used to ingest files from a local file system or from an AWS S3 bucket into GeoWave in a single process. For a distributed ingest (recommended for larger datasets) the ingest sparkToGW and ingest mrToGW commands can be used. Ingests can also be performed directly from HDFS or utilizing Kafka.

The full list of GeoWave ingest commands can be found in the GeoWave CLI Documentation.

For an example of the ingest process in action, see the Quickstart Guide.

Ingest Plugins

The CLI contains support for several ingest formats out of the box. You can list the available formats by utilizing the ingest listplugins command.

$ geowave ingest listpluginsThis command lists all of the ingest format plugins that are currently installed and should yield a result similar to the following:

Available ingest formats currently registered as plugins:

twitter:

Flattened compressed files from Twitter API

geotools-vector:

all file-based vector datastores supported within geotools

geolife:

files from Microsoft Research GeoLife trajectory data set

gdelt:

files from Google Ideas GDELT data set

stanag4676:

xml files representing track data that adheres to the schema defined by STANAG-4676

geotools-raster:

all file-based raster formats supported within geotools

gpx:

xml files adhering to the schema of gps exchange format

tdrive:

files from Microsoft Research T-Drive trajectory data set

avro:

This can read an Avro file encoded with the SimpleFeatureCollection schema. This schema is also used by the export tool, so this format handles re-ingesting exported datasets.

Statistics

When ingesting a large amount of data, it can be beneficial to configure the statistics on the data types to be ingested prior to actually ingesting any data in order to avoid having to run full table scans to calculate the initial value of those statistics. This can be done by performing the following steps:

-

Add the data types for the data that will be ingested by using the

type addcommand, which is nearly identical toingest localToGW, but does not ingest any data. If the data that is going to be ingested is not local, this command can still be used as long as there is a local source that matches the schema of the data to be ingested, such as a file with a single feature exported from the full data set. -

Add any number of statistics to those data types by using the

stat addcommand. -

Ingest the data using any of the ingest commands.

Using this method, the initial values of all added statistics will be calculated during the ingest process.

Time Configuration

Sometimes it is necessary to provide additional configuration information for a vector ingest. For example, if you have multiple time fields and need to specify which one should be use for a temporal index. In these cases, the system property SIMPLE_FEATURE_CONFIG_FILE may be assigned to the name of a locally accessible JSON file defining the configuration.

Example

$ GEOWAVE_TOOL_JAVA_OPT="-DSIMPLE_FEATURE_CONFIG_FILE=myconfigfile.json"

$ geowave ingest localtogw ./ingest_data mystore myindex|

If GeoWave was installed using the standalone installer, this property can be supplied to the |

This configuration file serves the following purposes:

-

Selecting which temporal attribute to use in temporal indices.

-

Setting the names of the indices to update in WFS-T transactions via the GeoServer plugin.

The JSON file is made up of a list of configurations. Each configuration is defined by a class name and a set of attributes and are grouped by the vector type name.

Temporal Configuration

Temporal configuration may be necessary if your vector feature type has more than one temporal attribute. The class name for this configuration is org.locationtech.geowave.core.geotime.util.TimeDescriptors$TimeDescriptorConfiguration.

There are three attributes for the temporal configuration:

-

timeName -

startRangeName -

endRangeName

These attributes are associated with the name of a simple feature type attribute that references a time value. To index by a single time attribute, set timeName to the name of the single attribute. To index by a range, set both startRangeName and endRangeName to the names of the simple feature type attributes that define start and end time values.

For example, if you had a feature type named myFeatureTypeName with two time attributes captureTime and processedTime, but wanted to tell GeoWave to use the captureTime attribute for the temporal index, the configuration would look like the following:

{

"configurations": {

"myFeatureTypeName" : [

{

"@class" : "org.locationtech.geowave.core.geotime.util.TimeDescriptors$TimeDescriptorConfiguration",

"timeName":"captureTime",

"startRangeName":null,

"endRangeName":null

}

]

}

}

Primary Index Identifiers

The class org.locationtech.geowave.adapter.vector.index.SimpleFeaturePrimaryIndexConfiguration is used to maintain the configuration of primary indices used for adding or updating simple features via the GeoServer plugin.

Example Configuration

All of the above configurations can be combined into a single configuration file. This would result in a configuration that looks something like the following:

{

"configurations": {

"myFeatureTypeName" : [

{

"@class" : "`org.locationtech.geowave.core.geotime.util.TimeDescriptors$TimeDescriptorConfiguration`",

"startRangeName":null,

"endRangeName":null,

"timeName":"captureTime"

},

{

"@class": "org.locationtech.geowave.adapter.vector.index.SimpleFeaturePrimaryIndexConfiguration",

"indexNames": ["SPATIAL_IDX"]

}

]

}

}

See the Visibility Management section of the appendix for information about visibility management.

Queries

Overview

In order to facilitate querying GeoWave data from the CLI, a basic query language is provided. The idea behind the GeoWave Query Language (GWQL) is to provide a familiar way to easily query, filter, and aggregate data from a GeoWave data store. The query language is similar to SQL, but currently only supports SELECT and DELETE statements. These queries can be executed using the query command.

$ geowave query <store name> "<query>"

The examples below use a hypothetical data store called example with a type called countries. This type contains all of the countries of the world with some additional attributes such as population and year established.

|

SELECT Statement

The SELECT statement can be used to fetch data from a GeoWave data store. It supports column selection, aggregation, filtering, and limiting.

Simple Queries

A standard SELECT statement has the following syntax:

SELECT <attributes> FROM <typeName> [ WHERE <filter> ]In this syntax, attributes can be a comma-delimited list of attributes to select from the type, or * to select all of the attributes. Attributes can also be aliased by using the AS operator. If an attribute or type name has some nonstandard characters, such as -, it can be escaped by surrounding the name in double quotes ("column-name"), backticks (`column-name`), or square brackets ([column-name]).

Aggregation Queries

Aggregations can also be done by using aggregation functions. Aggregation functions usually take an attribute as an argument, however, some aggregation functions work on the whole row as well, in which case * is accepted.

The following table shows the aggregation functions currently available through the query language.

| Aggregation Function | Parameters | Description |

|---|---|---|

COUNT |

Attribute Name or |

If an attribute name is supplied, counts the number of non-null values for that attribute. If |

BBOX |

Geometry Attribute Name or |

If a geometry attribute name is supplied, calculates the bounding box of all non-null geometries under that attribute. If |

SUM |

Numeric Attribute Name |

Calculates the sum of non-null values for the supplied attribute over the result set. |

MIN |

Numeric Attribute Name |

Finds the minimum value of the supplied attribute over the result set. |

MAX |

Numeric Attribute Name |

Finds the maximum value of the supplied attribute over the result set. |

It’s important to note that aggregation queries cannot be mixed with non-aggregated columns. If one of the column selectors has an aggregation function, all of the column selectors need to have an aggregation function.

Examples

SELECT COUNT(*) FROM countriesSELECT SUM(population) FROM countriesSELECT BBOX(*) AS bounds, MIN(population) AS minPop, MAX(population) AS maxPop FROM countriesLimit

It is often the case where not all of the data that matches the query parameters is necessary, in this case we can add a LIMIT to the query to limit the number of results returned. This can be done using the following syntax:

SELECT <attributes> FROM <typeName> [ WHERE <filter> ] LIMIT <count>

While LIMIT can be specified for aggregation queries, it doesn’t often make sense and can produce different results based on the underlying data store implementation.

|

DELETE Statement

The DELETE statement can be used to delete data from a GeoWave data store. It can either delete an entire type, or only data that matches a given filter. It has the following syntax:

DELETE FROM <typeName> [ WHERE <filter> ]| When all of the data of a given type is removed, that type is removed from the data store completely. Additionally, if that data represented the last data in an index, the index will also be removed. |

Filtering

All GWQL queries support filtering through the use of filter expressions. GeoWave supports filtering on many different expression types, each of which have their own supported predicates and functions. Multiple filter expressions can also be combined using AND and OR operators (e.g. a > 10 AND b < 100. Filter expressions can also be inverted by prepending it with NOT (e.g. NOT strContains(name, 'abc'))

In GWQL, function casing is not important; STRCONTAINS(name, 'abc') is equivalent to strContains(name, 'abc').

|

Numeric Expressions

Numeric expressions support all of the standard comparison operators: <, >, ⇐, >=, =, <> (not equal), IS NULL, IS NOT NULL, and BETWEEN … AND …. Additionally the following mathematics operations are supported: +, -, *, /. The operands for any of these operations can be a numeric literal, a numeric attribute, or another numeric expression.

Functions

Numeric expressions support the following functions:

| Function | Parameters | Description |

|---|---|---|

ABS |

Numeric Expression |

Transforms the numeric expression into one that represents the absolute value of the input expression. For example, the literal -64 would become 64. |

Examples

SELECT * FROM countries WHERE population > 100000000malePop and femalePop):SELECT COUNT(*) FROM countries WHERE malePop > femalePopSELECT * FROM countries WHERE population BETWEEN 10000000 AND 20000000SELECT * FROM countries WHERE ABS(femalePop - malePop) > 50000Text Expressions

Text expressions support all of the standard comparison operators: <, >, ⇐, >=, =, <> (not equal), IS NULL, IS NOT NULL, and BETWEEN … AND …. These operators will lexicographically compare the operands to determine if the filter is passed.

Functions

Text expressions support the following functions:

| Function | Parameters | Description |

|---|---|---|

CONCAT |

Text Expression, Text Expression |

Concatenates two text expressions into a single text expression. |

STRSTARTSWITH |

Text Expression, Text Expression [, Boolean] |

A predicate function that returns true when the first text expression starts with the second text expression. A third boolean parameter can also be supplied that will specify whether or not to ignore casing. By default, casing will NOT be ignored. |

STRENDSWITH |

Text Expression, Text Expression [, Boolean] |

A predicate function that returns true when the first text expression ends with the second text expression. A third boolean parameter can also be supplied that will specify whether or not to ignore casing. By default, casing will NOT be ignored. |

STRCONTAINS |

Text Expression, Text Expression [, Boolean] |

A predicate function that returns true when the first text expression contains the second text expression. A third boolean parameter can also be supplied that will specify whether or not to ignore casing. By default, casing will NOT be ignored. |

Examples

SELECT * FROM countries WHERE name > 'm'SELECT COUNT(*) FROM countries WHERE strEndsWith(name, 'stan')SELECT * FROM countries WHERE strContains(name, 'state', true)Temporal Expressions

Temporal expressions support all of the standard comparison operators: <, >, ⇐, >=, =, <> (not equal), IS NULL, IS NOT NULL, and BETWEEN … AND …. Temporal expressions can also be compared using temporal comparison operators: BEFORE, BEFORE_OR_DURING, DURING, DURING_OR_AFTER, and AFTER.

Temporal expressions can represent either a time instant or a time range. An instant in time can be specified as text literals using one of the following date formats: yyyy-MM-dd HH:mm:ssZ, yyyy-MM-dd’T’HH:mm:ss’Z', yyyy-MM-dd, or as a numeric literal representing the epoch milliseconds since January 1, 1970 UTC. A time range can be specified as a text literal by combining two dates separated by a /. For example, a time range of January 8, 2020 at 11:56 AM to February 12, 2020 at 8:20 PM could be defined as '2020-01-08T11:56:00Z/2020-02-12T20:20:00Z'. Time ranges are inclusive on the start date and exclusive on the end date.

If the left operand of a temporal operator is a temporal field (such as Date), then the right operand can be inferred from a numeric or text literal. If the left operand of a temporal expression is a numeric or text literal, it can be cast to a temporal expression using the <expression>::date syntax.

Functions

Temporal expressions support the following functions:

| Function | Parameters | Description |

|---|---|---|

TCONTAINS |

Temporal Expression, Temporal Expression |

A predicate function that returns true if the first temporal expression fully contains the second. |

TOVERLAPS |

Temporal Expression, Temporal Expression |

A predicate function that returns true when the first temporal expression overlaps the second temporal expression at any point |

Examples

SELECT * FROM countries WHERE established AFTER '1750-12-31'SELECT COUNT(*) FROM countries WHERE established DURING '1700-01-01T00:00:00Z/1800-01-01T00:00:00Z'SELECT COUNT(*) FROM countries WHERE dissolution IS NULLSpatial Expressions

Spatial expressions are used to compare geometries. The only comparison operators that are supported are =, <> (not equal), IS NULL and IS NOT NULL. The equality operators will topologically compare the left spatial expression to the right spatial expression. Most comparisons with spatial expressions will be done through one of the provided predicate functions.

Literal spatial expressions can be defined by a well-known text (WKT) string such as 'POINT(1 1)'. If a text literal needs to be explicitly cast as a spatial expression, such as when it is the left operand of an equality check, it can be done using the <expression>::geometry syntax.

Functions

Spatial expressions support the following functions:

| Function | Parameters | Description |

|---|---|---|

BBOX |

Spatial Expression, Min X, Min Y, Max X, Max Y, [, CRS code] |

A predicate function that returns true if the spatial expression intersects the provided bounds. An optional CRS code can be provided if the bounding dimensions are not in the default WGS84 projection. |

BBOXLOOSE |

Spatial Expression, Min X, Min Y, Max X, Max Y, [, CRS code] |

A predicate function that returns true if the spatial expression intersects the provided bounds. An optional CRS code can be provided if the bounding dimensions are not in the default WGS84 projection. This can provide a performance boost over the standard BBOX function at the cost of being overly inclusive with the results. |

INTERSECTS |

Spatial Expression, Spatial Expression |

A predicate function that returns true if the first spatial expression intersects the second spatial expression. |

INTERSECTSLOOSE |

Spatial Expression, Spatial Expression |

A predicate function that returns true if the first spatial expression intersects the second spatial expression. This can provide a performance boost over the standard INTERSECTS function at the cost of being overly inclusive with the results. |

DISJOINT |

Spatial Expression, Spatial Expression |

A predicate function that returns true if the first spatial expression is disjoint (does not intersect) to the second spatial expression. |

DISJOINTLOOSE |

Spatial Expression, Spatial Expression |

A predicate function that returns true if the first spatial expression is disjoint (does not intersect) to the second spatial expression. This can provide a performance boost over the standard INTERSECTS function at the cost of being overly inclusive with the results. |

CROSSES |

Spatial Expression, Spatial Expression |

A predicate function that returns true if the first spatial expression crosses the second spatial expression. |

CROSSES |

Spatial Expression, Spatial Expression |

A predicate function that returns true if the first spatial expression crosses the second spatial expression. |

OVERLAPS |

Spatial Expression, Spatial Expression |

A predicate function that returns true if the first spatial expression overlaps the second spatial expression. |

TOUCHES |

Spatial Expression, Spatial Expression |

A predicate function that returns true if the first spatial expression touches the second spatial expression. |

WITHIN |

Spatial Expression, Spatial Expression |

A predicate function that returns true if the first spatial expression lies completely within the second spatial expression. |

CONTAINS |

Spatial Expression, Spatial Expression |

A predicate function that returns true if the first spatial expression completely contains the second spatial expression. |

Examples

SELECT * FROM countries WHERE BBOX(geom, -10.8, 35.4, 63.3, 71.1)SELECT COUNT(*) FROM countries WHERE INTERSECTS(geom, 'LINESTRING(-9.14 39.5, 3.5 47.9, 20.56 53.12, 52.9 56.36)')Output Formats

By default, the query command outputs all results to the console in a tabular format, however it is often desirable to feed the results of these queries into a format that is usable by other applications. Because of this, the query command supports several output formats, each of which have their own options. The output format can be changed by supplying the -f option on the query.

The following table shows the currently available output formats.

| Format | Options | Description |

|---|---|---|

console |

Paged results are printed to the console. This is the default output format. |

|

csv |

|

Outputs the results to a CSV file specified by the |

shp |

|

Outputs the results to a Shapefile specified by the |

geojson |

|

Outputs the results to a GeoJSON file specified by the |

Examples

$ geowave query example "SELECT * FROM countries"

$ geowave query example "SELECT BBOX(*) AS bounds, MIN(population) AS minPop, MAX(population) AS maxPop FROM countries"

$ geowave query -f csv -o myfile.csv example "SELECT name, population FROM countries"

$ geowave query -f shp -o results.shp example "SELECT * FROM countries WHERE population > 100000000 AND established AFTER '1750-01-01'"

Statistics

Overview

GeoWave statistics are a way to maintain aggregated information about data stored within a data store. They can be useful to avoid having to run aggregation queries over many rows whenever basic information is needed. The statistics system is designed to be as flexible as possible to support a large number of use cases.

Statistic Types

There are three types of statistics in GeoWave:

-

Index Statistics - These statistics are aggregated over every row within an index. These are usually fairly broad as they do not make assumptions about the data types that are stored in the index. Some examples of index statistics used by GeoWave are row range histograms, index metadata, and duplicate entry counts.

-

Data Type Statistics - These statistics are aggregated over every row within a data type. The most common data type statistic is the count statistic, which simply counts the number of entries in a given data type.

-

Field Statistics - These statistics are aggregated over every row within a data type, but are usually calculated from the value of a single field within the data type. Statistics are usually designed to work on specific field types. For example, a numeric mean statistic will calculate the mean value of a field across all rows in the data set.

The list of available statistic types can be discovered by using the stat listtypes command.

Binning Strategies

While the various suppported statistics provide some general capabilities, a lot of the flexibility of the statistics system comes from using statistics with different binning strategies. Binning strategies are a way to split a statistic by some algorithm. For example, a data set with a categorical field such as Color could have a count statistic that is binned by that field. The result would be a statistic that maintains the count of each Color in the entire data set. Any statistic can be combined with any binning strategy for a plethora of possibilities. Multiple different binning strategies can also be combined to provide even more customization.

The list of available binning strategies can be discovered by using the stat listtypes command with the -b command line option.

For a full list of GeoWave statistics commands, including examples of each, see the statistics section of the GeoWave CLI Documentation.

Examples

COUNT statistic to the counties type binned by the state_code field in the example data store:$ geowave stat add example -t COUNT --typeName counties -b FIELD_VALUE --binField state_code

NUMERIC_STATS statistic on the field 'population' binned by the geometry field using Uber’s H3 hex grids at resolution 2 (this will maintain stats such as count, variance, sum, and mean of the populations of counties grouped together in approx. 87K km2 hexagons):$ geowave stat add example -t NUMERIC_STATS --typeName counties --fieldName population -b SPATIAL --binField geometry --type H3 --resolution 2

counties type in the example data store:$ geowave stat list example --typeName counties

example data store:$ geowave stat recalc example --all

Analytics

Overview

Analytics embody algorithms tailored to geospatial data. Most analytics leverage either Hadoop MapReduce or Spark for bulk computation. Results of analytic jobs consist of vector or raster data stored in GeoWave.

GeoWave provides the following algorithms out of the box.

| Name | Description |

|---|---|

KMeans++ |

A K-means implementation to find K centroids over the population of data. A set of preliminary sampling iterations find an optimal value of K and the initial set of K centroids. The algorithm produces K centroids and their associated polygons. Each polygon represents the concave hull containing all features associated with a centroid. The algorithm supports drilling down multiple levels. At each level, the set centroids are determined from the set of features associated the same centroid from the previous level. |

KMeans Jump |

Uses KMeans++ over a range of K, choosing an optimal K using an information theoretic based measurement. |

KMeans Parallel |

A K-means implementation that is performed in parallel. |

KMeans Spark |

A K-means implementation that is performed with Spark ML. |

KDE |

A Kernel Density Estimation implementation that produces a density raster from input vector data. |

KDE Spark |

Executes the KDE implementation using Apache Spark. |

DBScan |

The Density Based Scanner algorithm produces a set of convex polygons for each region meeting density criteria. Density of region is measured by a minimum cardinality of enclosed features within a specified distance from each other. |

Nearest Neighbors |

An infrastructure component that produces all the neighbors of a feature within a specific distance. |

For more information about running each of these analytics, see the GeoWave CLI Documentation.

GeoServer Plugin

GeoServer is a third-party tool that integrates with GeoWave through a plugin that can be added to a GeoServer installation. The plugin can be used to explore both raster and vector data from a GeoWave data store. This section provides an overview for integrating the GeoWave plugin with GeoServer. For full GeoServer documentation and how-to guides, please refer to the official GeoServer documentation.

Installation

There are two ways to obtain the GeoWave GeoServer plugin JAR, the first is to simply download it from the Release JARs section of the downloads page. The second is to package the JAR from the GeoWave source.

The GeoWave GeoServer plugin can be installed by simply dropping the plugin JAR into the WEB-INF/lib directory of GeoServer’s installation and then restarting the web service.

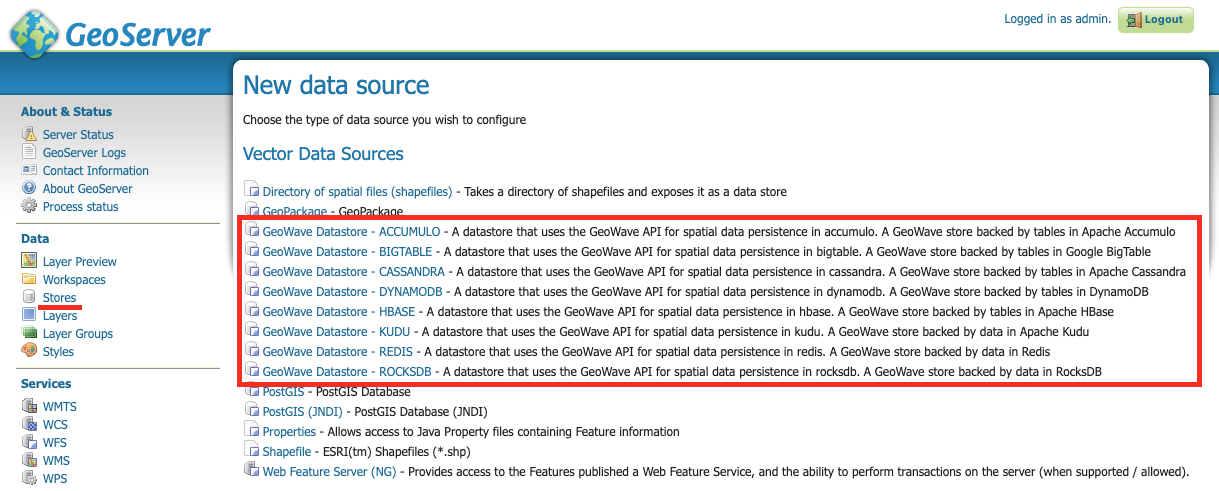

Data Sources

GeoWave data stores are supported by GeoServer through the GeoTools DataStore API. After installing the GeoWave plugin on a GeoServer instance, GeoWave data stores can be configured through the GeoServer web interface by clicking on the Stores link under the Data section of the navigation bar.

When adding a new GeoWave store, several configuration options are available, depending on the type of store being added. For options that are not required, suitable defaults are provided by GeoWave if a value is not supplied. The options available for each store are detailed below.

Common Configuration Options

These options are available for all data store types.

| Name | Description | Constraints |

|---|---|---|

gwNamespace |

The namespace to use for GeoWave data |

|

enableServerSideLibrary |

Whether or not to enable server-side processing if possible |

|

enableSecondaryIndexing |

Whether or not to enable secondary indexing |

|

enableVisibility |

Whether or not to enable visibility filtering |

|

maxRangeDecomposition |

The maximum number of ranges to use when breaking down queries |

|

aggregationMaxRangeDecomposition |

The maximum number of ranges to use when breaking down aggregation queries |

|

Lock Management |

Select one from a list of lock managers |

|

Authorization Management Provider |

Select from a list of providers |

|

Authorization Data URL |

The URL for an external supporting service or configuration file |

The interpretation of the URL depends on the selected provider |

Transaction Buffer Size |

Number of features to buffer before flushing to the data store |

|

Query Index Strategy |

The pluggable query strategy to use for querying GeoWave tables |

Accumulo Data Store Configuration

These options are available for Accumulo data stores.

| Name | Description | Constraints |

|---|---|---|

zookeeper |

Comma-separated list of Zookeeper host and port |

Host and port are separated by a colon (host:port) |

instance |

The Accumulo tablet server’s instance name |

The name matches the one configured in Zookeeper |

user |

The Accumulo user name |

The user should have administrative privileges to add and remove authorized visibility constraints |

password |

Accumulo user’s password |

Bigtable Data Store Configuration

These options are available for Bigtable data stores.

| Name | Description | Constraints |

|---|---|---|

scanCacheSize |

The number of rows passed to each scanner (higher values will enable faster scanners, but will use more memory) |

|

projectId |

The Bigtable project to connect to |

|

instanceId |

The Bigtable instance to connect to |

Cassandra Data Store Configuration

These options are available for Cassandra data stores.

| Name | Description | Constraints |

|---|---|---|

contactPoints |

A single contact point or a comma delimited set of contact points to connect to the Cassandra cluster |

|

batchWriteSize |

The number of inserts in a batch write |

|

durableWrites |

Whether to write to commit log for durability, configured only on creation of new keyspace |

|

replicas |

The number of replicas to use when creating a new keyspace |

DynamoDB Data Store Configuration

These options are available for DynamoDB data stores.

| Name | Description | Constraints |

|---|---|---|

endpoint |

The endpoint to connect to |

Specify either endpoint or region, not both |

region |

The AWS region to use |

Specify either endpoint or region, not both |

initialReadCapacity |

The maximum number of strongly consistent reads consumed per second before throttling occurs |

|

initialWriteCapacity |

The maximum number of writes consumed per second before throttling occurs |

|

maxConnections |

The maximum number of open HTTP(S) connections active at any given time |

|

protocol |

The protocol to use |

|

cacheResponseMetadata |

Whether to cache responses from AWS |

High performance systems can disable this but debugging will be more difficult |

HBase Data Store Configuration

These options are available for HBase data stores.

| Name | Description | Constraints |

|---|---|---|

zookeeper |

Comma-separated list of Zookeeper host and port |

Host and port are separated by a colon (host:port) |

scanCacheSize |

The number of rows passed to each scanner (higher values will enable faster scanners, but will use more memory) |

|

disableVerifyCoprocessors |

Disables coprocessor verification, which ensures that coprocessors have been added to the HBase table prior to executing server-side operations |

|

coprocessorJar |

Path (HDFS URL) to the JAR containing coprocessor classes |

Kudu Data Store Configuration

These options are available for Kudu data stores.

| Name | Description | Constraints |

|---|---|---|

kuduMaster |

A URL for the Kudu master node |

Redis Data Store Configuration

These options are available for Redis data stores.

| Name | Description | Constraints |

|---|---|---|

address |

The address to connect to |

A Redis address such as |

compression |

The type of compression to use on the data |

Can be |

RocksDB Data Store Configuration

These options are available for RocksDB data stores.

| Name | Description | Constraints |

|---|---|---|

dir |

The directory of the RocksDB data store |

|

compactOnWrite |

Whether to compact on every write, if false it will only compact on merge |

|

batchWriteSize |

The size (in records) for each batched write |

Anything less than or equal to 1 will use synchronous single record writes without batching |

GeoServer CLI Configuration

GeoWave can be configured for a GeoServer connection through the config geoserver command.

$ geowave config geoserver <geoserver_url> --user <username> --pass <password>| Argument | Required | Description |

|---|---|---|

--url |

True |

GeoServer URL (for example http://localhost:8080/geoserver), or simply host:port and appropriate assumptions are made |

--username |

True |

GeoServer User |

--password |

True |

GeoServer Password - Refer to the password security section for more details and options |

--workspace |

False |

GeoServer Default Workspace |

GeoWave supports connecting to GeoServer through both HTTP and HTTPS (HTTP + SSL) connections. If connecting to GeoServer through an HTTP connection (e.g., http://localhost:8080/geoserver), the command above is sufficient.

GeoServer SSL Connection Properties

If connecting to GeoServer through a Secure Sockets Layer (SSL) connection over HTTPS (e.g., https://localhost:8443/geoserver), some additional configuration options need to be specified, in order for the system to properly establish the secure connection’s SSL parameters. Depending on the particular SSL configuration through which the GeoServer server is being connected, you will need to specify which parameters are necessary.

|

Not all SSL configuration settings may be necessary, as it depends on the setup of the SSL connection through which GeoServer is hosted. Contact your GeoServer administrator for SSL connection related details. |

| SSL Argument | Description |

|---|---|

--sslKeyManagerAlgorithm |

Specify the algorithm to use for the keystore. |

--sslKeyManagerProvider |

Specify the key manager factory provider. |

--sslKeyPassword |

Specify the password to be used to access the server certificate from the specified keystore file. - Refer to the password security section for more details and options. |

--sslKeyStorePassword |

Specify the password to use to access the keystore file. - Refer to the password security section for more details and options. |

--sslKeyStorePath |

Specify the absolute path to where the keystore file is located on system. The keystore contains the server certificate to be loaded. |

--sslKeyStoreProvider |

Specify the name of the keystore provider to be used for the server certificate. |

--sslKeyStoreType |

The type of keystore file to be used for the server certificate, e.g., JKS (Java KeyStore). |

--sslSecurityProtocol |

Specify the Transport Layer Security (TLS) protocol to use when connecting to the server. By default, the system will use TLS. |

--sslTrustManagerAlgorithm |

Specify the algorithm to use for the truststore. |

--sslTrustManagerProvider |

Specify the trust manager factory provider. |

--sslTrustStorePassword |

Specify the password to use to access the truststore file. - Refer to the password security section for more details and options |

--sslTrustStorePath |

Specify the absolute path to where truststore file is located on system. The truststore file is used to validate client certificates. |

--sslTrustStoreProvider |

Specify the name of the truststore provider to be used for the server certificate. |

--sslTrustStoreType |

Specify the type of key store used for the truststore, e.g., JKS (Java KeyStore). |

WFS-T

Transactions are initiated through a Transaction operatio, that contains inserts, updates, and deletes to features. WFS-T supports feature locks across multiple requests by using a lock request followed by subsequent use of a provided Lock ID. The GeoWave implementation supports transaction isolation. Consistency during a commit is not fully supported. Thus, a failure during a commit of a transaction may leave the affected data in an intermediary state. Some deletions, updates, or insertions may not be processed in such a case. The client application must implement its own compensation logic upon receiving a commit-time error response. Operations on single feature instances are atomic.

Inserted features are buffered prior to commit. The features are bulk fed to the data store when the buffer size is exceeded and when the transaction is committed. In support of atomicity and isolation, prior to commit, flushed features are marked in a transient state and are only visible to the controlling transaction. Upon commit, these features are 'unmarked'. The overhead incurred by this operation is avoided by increasing the buffer size to avoid pre-commit flushes.

Lock Management

Lock management supports life-limited locks on feature instances. The only supported lock manager is in-memory, which is suitable for single Geoserver instance installations.

Index Selection

Data written through WFS-T is indexed within a single index. When writing data, the adapter inspects existing indices and finds the index that best matches the input data. A spatial-temporal index is chosen for features with temporal attributes. If no suitable index can be found, a spatial index will be created. A spatial-temporal index will not be automatically created, even if the feature type contains a temporal attribute as spatial-temporal indices can have reduced performance on queries requesting data over large spans of time.

Security

Authorization Management

Authorization Management determines the set of authorizations to supply to GeoWave queries to be compared against the visibility expressions attached to GeoWave data.

The provided implementations include the following:

-

Empty - Each request is processed without additional authorization.

-

JSON - The requester user name, extracted from the Security Context, is used as a key to find the user’s set of authorizations from a JSON file. The location of the JSON file is determined by the associated Authorization Data URL (e.g., /opt/config/auth.json). An example of the contents of the JSON file is given below.

{

"authorizationSet": {

"fred" : ["1","2","3"],

"barney" : ["a"]

}

}In this example, the user fred has three authorization labels. The user barney has just one.

Additional authorization management strategies can be registered through the Java Service Provider Interface (SPI) model by implementing the AuthorizationFactorySPI interface. For more information on using SPI, see the Oracle documentation.

|

Appendices

Migrating Data to Newer Versions

When a major change is made to the GeoWave codebase that alters the serialization of data in a data store, a migration will need to be performed to make the data store compatible with the latest version of the programmatic API and the command-line tools. Beginning in GeoWave 2.0, attempting to access a data store with an incompatible version of the CLI will propmpt the user with an error. If the data store version is later than that of the CLI, it will ask that the CLI version be updated to a compatible version. If the data store version is older, it will prompt the user to run the migration command to perform any updates needed to make the data store compatible with the CLI version. Performing this migration allows you to avoid a potentially costly re-ingest of your data.

For more information about the migration command, see the util migrate documentation.

Configuring Accumulo for GeoWave

Overview

The two high level tasks to configure Accumulo for use with GeoWave are to:

-

Ensure the memory allocations for the master and tablet server processes are adequate.

-

Add the GeoWave libraries to the Accumulo classpath. The libraries are rather large, so ensure the Accumulo Master process has at least 512m of heap space and the Tablet Server processes have at least 1g of heap space.

The recommended Accumulo configuration for GeoWave requires several manual configuration steps, but isolates the GeoWave libraries to application specific classpath(s). This reduces the possibility of dependency conflict issues. You should ensure that each namespace containing GeoWave tables is configured to pick up the GeoWave Accumulo JAR on the classpath.

Procedure

-

Create a user and namespace.

-

Grant the user ownership permissions on all tables created within the application namespace.

-

Create an application or data set specific classpath.

-

Configure all tables within the namespace to use the application classpath.

accumulo shell -u root

createuser geowave (1)

createnamespace geowave

grant NameSpace.CREATE_TABLE -ns geowave -u geowave (2)

config -s general.vfs.context.classpath.geowave=hdfs://${MASTER_FQDN}:8020/${ACCUMULO_ROOT}/lib/[^.].*.jar (3)

config -ns geowave -s table.classpath.context=geowave (4)

exit| 1 | You’ll be prompted for a password. |

| 2 | Ensure the user has ownership of all tables created within the namespace. |

| 3 | The Accumulo root path in HDFS varies between hadoop vendors. For Apache and Cloudera it is '/accumulo' and for Hortonworks it is '/apps/accumulo' |

| 4 | Link the namespace with the application classpath. Adjust the labels as needed if you’ve used different user or application names |

These manual configuration steps have to be performed before attempting to create GeoWave index tables. After the initial configuration, you may elect to do further user and namespace creation and configuring to provide isolation between groups and data sets.

Managing

After installing a number of different iterators, you may want to figure out which iterators have been configured.

# Print all configuration and grep for line containing vfs.context configuration and also show the following line

accumulo shell -u root -p ROOT_PWD -e "config -np" | grep -A 1 general.vfs.context.classpathYou will get back a listing of context classpath override configurations that map the application or user context you configured to a specific iterator JAR in HDFS.

Versioning

It’s of critical importance to ensure that the various GeoWave components are all the same version and that your client is of the same version that was used to write the data.

Basic

The RPM packaged version of GeoWave puts a timestamp in the name so it’s pretty easy to verify that you have a matched set of RPMs installed. After an update of the components, you must restart Accumulo to get vfs to download the new versions and this should keep everything synched.

[geowaveuser@c1-master ~]$ rpm -qa | grep geowave

geowave-2.0.2-SNAPSHOT-apache-core-2.0.2-SNAPSHOT-201602012009.noarch

geowave-2.0.2-SNAPSHOT-apache-jetty-2.0.2-SNAPSHOT-201602012009.noarch

geowave-2.0.2-SNAPSHOT-apache-accumulo-2.0.2-SNAPSHOT-201602012009.noarch

geowave-2.0.2-SNAPSHOT-apache-tools-2.0.2-SNAPSHOT-201602012009.noarchAdvanced

When GeoWave tables are first accessed on a tablet server, the vfs classpath tells Accumulo where to download the JAR file from HDFS.

The JAR file is copied into the local /tmp directory (the default general.vfs.cache.dir setting) and loaded onto the classpath.

If there is ever doubt as to if these versions match, you can use the commands below from a tablet server node to verify the version of

this artifact.

sudo -u hdfs hadoop fs -cat /accumulo/classpath/geowave/geowave-accumulo-build.properties | grep scm.revision | sed s/project.scm.revision=(1)| 1 | The root directory of Accumulo can vary by distribution, so check with hadoop fs -ls / first to ensure you have the correct initial path. |

sudo find /tmp -name "*geowave-accumulo.jar" -exec unzip -p {} build.properties \; | grep scm.revision | sed s/project.scm.revision=//[spohnae@c1-node-03 ~]$ sudo -u hdfs hadoop fs -cat /${ACCUMULO_ROOT}/lib/geowave-accumulo-build.properties | grep scm.revision | sed s/project.scm.revision=//

294ffb267e6691de3b9edc80e312bf5af7b2d23f (1)

[spohnae@c1-node-03 ~]$ sudo find /tmp -name "*geowave-accumulo.jar" -exec unzip -p {} build.properties \; | grep scm.revision | sed s/project.scm.revision=//

294ffb267e6691de3b9edc80e312bf5af7b2d23f (2)

294ffb267e6691de3b9edc80e312bf5af7b2d23f (2)

25cf0f895bd0318ce4071a4680d6dd85e0b34f6b| 1 | This is the version loaded into HDFS and should be present on all tablet servers once Accumulo has been restarted. |

| 2 | The find command will probably locate a number of different versions depending on how often you clean out /tmp. |

There may be multiple versions present - one per JVM. An error will occur if a tablet server is missing the correct JAR.

Visibility Management

Overview

When data is written to GeoWave, it may contain visibility constraints. By default, the visibility expression attached to each attribute is empty, which means that the data is visible regardless of which authorizations are present. If a visibility expression is set for an entry, only queries that supply the appropriate authorizations will be able to see it.

Visibility can be configured on a type by utilizing one or more of the visibility options during ingest or when adding a new type via the type add command. These options allow the user to specify the visibility of each field individually, or specify a field in their type that defines visibility information. One complex example of this would be having a type that contains a field with visibility information in JSON format. Each name/value pair within the JSON structure defines the visibility for the associated attribute. In the following example, the geometry attribute is given a visibility S and the eventName attribute is given a visibility TS. This means that a user with an authorization set of ["S","TS"] would be able to see both attributes, while a user with only ["S"] would only be able to see the geometry attribute.

{ "geometry" : "S", "eventName": "TS" }JSON attributes can be regular expressions matching more than one feature property name. In the example, all attributes except for those that start with geo have visibility TS.

{ "geo.*" : "S", ".*" : "TS" }The order of the name/value pairs must be considered if one rule is more general than another, as shown in the example. The rule .* matches all properties. The more specific rule geo.* must be ordered first.

For more information about other ways to configure visibility for a type, see the type add CLI documentation.

Visibility Expressions

It is sometimes necessary to provide more complex visibility constraints on a particular attribute, such as allowing two different authorizations to have view permissions. GeoWave handles this by using visibility expressions. These expressions support AND and OR operations through the symbols & and |. It also supports parentheses for situations where more complex expressions are required.

Examples

A and B authorizations to see the data:A|B

A and B authorizations are provided:A&B

A and B are provided, but also if only C is provided:(A&B)|C

A and one of B or C are provided:A&(B|C)

GeoWave Security

Data Store Passwords

In order to provide security around account passwords, particularly those entered through command-line, GeoWave is configured to perform encryption on password fields that are configured for data stores or other configured components. To take the topic of passwords even further, GeoWave has also been updated to support multiple options around how to pass in passwords when configuring a new data store, rather than always having to enter passwords in clear-text on the command-line.

Password Options

-

pass:<password>

-

This option will allow for a clear-text password to be entered on command-line. It is strongly encouraged not to use this method outside of a local development environment (i.e., NOT in a production environment or where concurrent users are sharing the same system).

-

-

env:<environment variable containing the password>

-

This option will allow for an environment variable to be used to store the password, and the name of the environment variable to be entered on command-line in place of the password itself.

-

-

file:<path to local file containing the password>

-

This option will allow for the password to be inside a locally-accessible text file, and the path to file to be entered on command-line in place of the password itself. Please note that the password itself is the ONLY content to be stored in the file as this option will read all content from the file and store that as the password.

-

-

propfile:<path to local properties file containing the password>:<property file key to password value>

-

This option will allow for the password to be stored inside a locally-accessible properties file, and the key that stores the password field to be also specified. The value associated with the specified key will be looked up and stored as the password.

-

-

stdin

-

This option will result in the user being prompted after hitting enter, and will prevent the entered value from appearing in terminal history.

-

|

Users can still continue to enter their password in plain text at command-line (just as was done with previous versions of GeoWave), but it is strongly encouraged not to do so outside of a local development environment (i.e., NOT in a production environment or where concurrent users are sharing the same system). |

Password Encryption

Passwords are encrypted within GeoWave using a local encryption token key. This key should not be manipulated manually, as doing so may compromise the ability to encrypt new data or decrypt existing data.

In the event that the encryption token key is compromised, or thought to be compromised, a new token key can very easily be generated using a GeoWave command.

$ geowave config newcryptokeyThe above command will re-encrypt all passwords already configured against the new token key. As a result, the previous token key is obsolete and can no longer be used.

|

This option is only useful to counter the event that only the token key file is compromised. In the event that both the token key file and encrypted password value have been compromised, it is recommended that steps are taken to change the data store password and re-configure GeoWave to use the new password. |

Configuring Console Echo

When the 'stdin' option is specified for passwords to be entered at command-line, it is recognized that there are circumstances where the console echo is wanted to be enabled (i.e., someone looking over your shoulder), and other times where the console echo is wanted to be disabled.

For configuring the default console echo setting:

$ geowave config set geowave.console.default.echo.enabled={true|false}The above command will set the default setting for all console prompts. Default is false if not specified, meaning any characters that are typed (when console echo is disabled) are not shown on the screen.

GeoWave provides the ability to override the console echo setting for passwords specifically. For configuring the password console echo setting:

$ geowave config set geowave.console.password.echo.enabled={true|false}If the above is specified, this setting will be applied for passwords when a user is promoted for input. By default, if the passwords console echo is not specified, the system will use the console default echo setting.

Enabling/Disabling Password Encryption

GeoWave provides the ability to enable or disable password encryption as it is seen necessary. By default, password encryption is enabled, but can be disabled for debugging purposes. For configuring the password encryption enabled setting:

$ geowave config set geowave.encryption.enabled={true|false}|

Disabling password encryption is HIGHLY discouraged, particularly in a production (or similar) environment. While this option is available for assisting with debugging credentials, it should be avoided in production-like environments to avoid leaking credentials to unauthorized parties. |

Puppet

Overview

A GeoWave Puppet module has been provided as part of both the tar.gz archive bundle and as an RPM. This module can be used to install the various GeoWave services onto separate nodes in a cluster or all onto a single node for development.

There are a couple of different RPM repo settings that may need to be provided. As the repo is disabled by default to avoid picking up new Accumulo iterator JARs without coordinating a service restart, there is likely some customization required for a particular use case. Class parameters are intended to be overridden to provide extensibility.

Options

- geowave_version

-

The desired version of GeoWave to install, ex: '2.0.2-SNAPSHOT'. We support concurrent installs but only one will be active at a time.

- hadoop_vendor_version

-

The Hadoop framework vendor and version against which GeoWave was built. Examples would be cdh5 or hdp2. Check the available packages for currently supported Hadoop distributions.

- install_accumulo

-

Install the GeoWave Accumulo Iterator on this node and upload it into HDFS. This node must have a working HDFS client.

- install_app

-

Install the GeoWave ingest utility on this node. This node must have a working HDFS client.

- install_app_server

-

Install Jetty with Geoserver and GeoWave plugin on this node.

- http_port

-

The port on which the Tomcat application server will run - defaults to 8080.

- repo_base_url

-

Used with the optional geowave::repo class to point the local package management system at a source for GeoWave RPMs. The default location is http://s3.amazonaws.com/geowave-rpms/release/noarch/.

- repo_enabled

-

To pick up an updated Accumulo iterator you’ll need to restart the Accumulo service. We don’t want to pick up new RPMs with something like a yum-cron job without coordinating a restart so the repo is disabled by default.

- repo_refresh_md

-

The number of seconds before checking for new RPMs. On a production system the default of every 6 hours should be sufficient, but you can lower this down to 0 for a development system on which you wish to pick up new packages as soon as they are made available.

Examples

Development

Install everything on a one-node development system. Use the GeoWave Development RPM Repo and force a check for new RPMs with every pull (don’t use cached metadata).

# Dev VM

class { 'geowave::repo':

repo_enabled => 1,

repo_refresh_md => 0,

} ->

class { 'geowave':

geowave_version => '2.0.2-SNAPSHOT',

hadoop_vendor_version => 'apache',

install_accumulo => true,

install_app => true,

install_app_server => true,

}Clustered

Run the application server on a different node. Use a locally maintained rpm repo vs. the one available on the Internet and run the app server on an alternate port, so as not to conflict with another service running on that host.

# Master Node

node 'c1-master' {

class { 'geowave::repo':

repo_base_url => 'http://my-local-rpm-repo/geowave-rpms/dev/noarch/',

repo_enabled => 1,

} ->

class { 'geowave':

geowave_version => '2.0.2-SNAPSHOT',

hadoop_vendor_version => 'apache',

install_accumulo => true,

install_app => true,

}

}

# App server node

node 'c1-app-01' {

class { 'geowave::repo':

repo_base_url => 'http://my-local-rpm-repo/geowave-rpms/dev/noarch/',

repo_enabled => 1,

} ->

class { 'geowave':

geowave_version => '2.0.2-SNAPSHOT',

hadoop_vendor_version => 'apache',

install_app_server => true,

http_port => '8888',

}

}Puppet script management

As mentioned in the overview, the scripts are available from within the GeoWave source tar bundle (Search for gz to filter the list). You could also use the RPM package to install and pick up future updates on your puppet server.

Source Archive

Unzip the source archive, locate puppet-scripts.tar.gz, and manage the scripts yourself on your Puppet Server.

RPM

There’s a bit of a boostrap issue when first configuring the Puppet server to use the GeoWave puppet RPM as yum won’t know about the RPM Repo and the GeoWave Repo Puppet class hasn’t been installed yet. There is an RPM available that will set up the yum repo config after which you should install geowave-puppet manually and proceed to configure GeoWave on the rest of the cluster using Puppet.

rpm -Uvh http://s3.amazonaws.com/geowave-rpms/release/noarch/geowave-repo-1.0-3.noarch.rpm

yum --enablerepo=geowave install geowave-puppet